INSTALL APACHE SPARK AND ZEPPELIN

Related guides:

- Install Hadoop 3.0.0 in Windows (Single Node)
- Install Spark 2.2.1 in Windows

INSTALL APACHE SPARK ZEPPELIN CODE

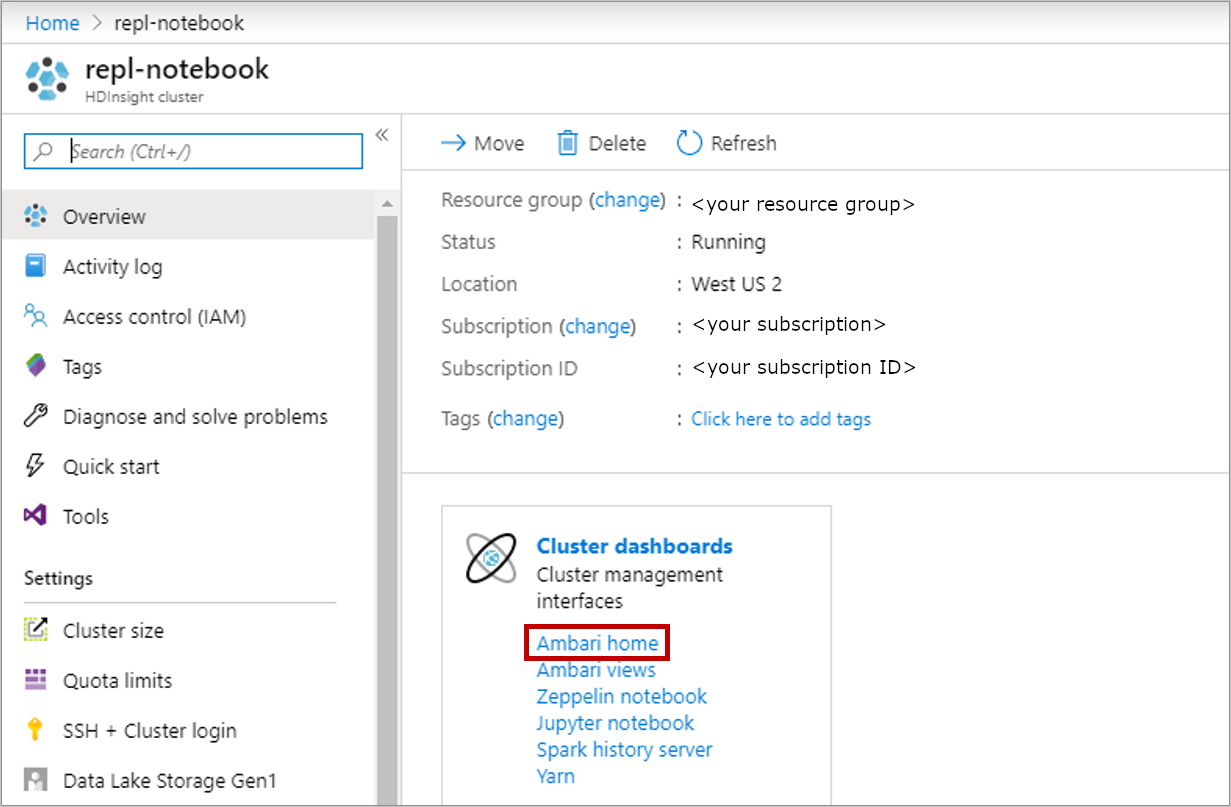

And to install Zeppelin, as you might have guessed by now, run `brew install apache-zeppelin`. The brew formula declares no explicit dependencies, but it is good to have at least Apache Spark installed, so that you can validate the installation with the Spark interpreter. The steps should be the same in other Windows environments, although some of the screenshots may differ.

I have installed an HDInsight Spark cluster in my Azure resource group, but it has neither the R kernel nor the Zeppelin notebook installed. I can see that a script action can be used while the cluster is being created, but how can it be enabled once the cluster is already available?

DBR 7 matches Sedona 1.1.0-incubating, while DBR 9 matches Sedona 1.1.1-incubating better, because Databricks cherry-picks some private Spark 3.2 APIs. Sedona 1.1.1-incubating is overall the recommended version to use.
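As a rough sketch, the DBR-to-Sedona pairings above can be captured in a small shell helper. The function name `sedona_version_for_dbr` is hypothetical, and falling back to 1.1.1-incubating for runtimes the text does not mention (such as DBR 8) is an assumption based only on it being the overall recommended version.

```shell
#!/usr/bin/env bash
# Hypothetical helper: suggest a Sedona release for a Databricks Runtime (DBR)
# version, following the pairings given in the text. The fallback for any
# other DBR is an assumption: the overall recommended 1.1.1-incubating.
sedona_version_for_dbr() {
  local major="${1%%.*}"   # "7.3" -> "7", "9.1" -> "9"
  case "$major" in
    ''|*[!0-9]*)
      echo "usage: sedona_version_for_dbr <dbr-version>" >&2
      return 2
      ;;
    7) echo "1.1.0-incubating" ;;  # DBR 7 (Spark 3.0)
    *) echo "1.1.1-incubating" ;;  # DBR 9 (cherry-picked Spark 3.2 APIs), DBR 10/11, and fallback
  esac
}
```

For example, `sedona_version_for_dbr 9.1` prints `1.1.1-incubating`, and a non-numeric argument prints a usage message and returns 2.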
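The Homebrew installation described in this section could be scripted roughly as follows. This is a sketch under assumptions: the `run` and `install_zeppelin` names and the `DRY_RUN` switch are hypothetical, `apache-spark` is assumed to be the Homebrew formula for Spark, and the script assumes the `apache-zeppelin` formula puts Zeppelin's `zeppelin-daemon.sh` on your `PATH`.

```shell
#!/usr/bin/env bash
# Hypothetical sketch: install Zeppelin, plus Spark so the install can be
# validated through the Spark interpreter, then start the Zeppelin server.
# With DRY_RUN=1 the commands are only printed, not executed.

# Run a command, or just print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

install_zeppelin() {
  # Homebrew itself must already be installed (skipped in dry-run mode).
  if [ "${DRY_RUN:-0}" != "1" ] && ! command -v brew >/dev/null 2>&1; then
    echo "Homebrew not found; install it first." >&2
    return 1
  fi
  run brew install apache-zeppelin
  # The formula declares no dependencies; Spark is optional but lets you
  # test the Spark interpreter.
  run brew install apache-spark
  # Assumes zeppelin-daemon.sh is on PATH; the web UI defaults to port 8080.
  run zeppelin-daemon.sh start
}
```

`DRY_RUN=1 install_zeppelin` prints the three commands without running them; drop `DRY_RUN` to actually install. Once the server is up, a note with a `%spark` paragraph exercises the Spark interpreter.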

INSTALL APACHE SPARK ZEPPELIN GENERATOR

Sedona 1.0.1 and 1.1.0 are compiled against Spark 3.1 (~ Databricks DBR 9 LTS; DBR 7 is Spark 3.0), while Sedona 1.1.1 is compiled against Spark 3.2 (~ DBR 10 and 11). In Spark 3.2, the `Generator` class gained a field, `nodePatterns`, so any SQL functions that rely on the `Generator` class may have issues if they are compiled for a runtime with a different Spark version.

You just need to install the Sedona jars and Sedona Python on Databricks using the default Databricks web UI. Two install routes are:

- Install on Databricks Community Edition (free tier)
- Install Sedona via init script (for DBRs > 7.3)

Apache Zeppelin provides you with a safe environment to gain insight into your data. Its interpreter concept allows any language or data-processing backend to be plugged into Zeppelin, and it currently supports many interpreters, such as Apache Spark, Apache Flink, Python, R, JDBC, Markdown and Shell.

To get the Zeppelin deployment running with the config file from the Zeppelin homepage, try changing three lines in the config file:

- line 32: the name of the published image is `apache/zeppelin:0.9.0`.
- line 33: `ZEPPELIN_HOME` should be set to `/zeppelin`. This corresponds to the value of `Z_HOME` in the Docker file of the …
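Editing the config lines by hand works fine; as a sketch, the same change can also be scripted. The helper `set_yaml_value_at_line` is hypothetical, the file name `zeppelin-server.yaml` in the usage note is an assumption, and the helper assumes each target line is a simple `key: value` pair whose new value contains no `|`, `&` or `\` (characters that are special in the `sed` replacement used here).

```shell
#!/usr/bin/env bash
# Hypothetical helper: on line N of a YAML file, keep everything up to and
# including the first ':' (indentation and key) and replace the rest with a
# new value. Writes through a temp file, so it works with GNU and BSD sed.
set_yaml_value_at_line() {
  local file="$1" line="$2" value="$3"
  case "$line" in
    ''|*[!0-9]*) echo "line must be a number: $line" >&2; return 2 ;;
  esac
  sed -E "${line}s|^([^:]*:).*|\\1 ${value}|" "$file" > "$file.tmp" \
    && mv "$file.tmp" "$file"
}
```

For example, `set_yaml_value_at_line zeppelin-server.yaml 32 apache/zeppelin:0.9.0` followed by `set_yaml_value_at_line zeppelin-server.yaml 33 /zeppelin`. Check the target lines first with `sed -n '32,33p' zeppelin-server.yaml`, since line numbers can shift between versions of the file.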
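Because `Generator`-based SQL functions can break when the compile-time and runtime Spark versions differ, a quick pre-flight check can compare the two. The helper names are hypothetical, and the lookup only covers the Sedona releases named in this section. The check is deliberately conservative: per the text, DBR 9 (Spark 3.1) still works better with Sedona 1.1.1-incubating because of cherry-picked Spark 3.2 APIs, so this check would warn there even though that pairing is the recommended one.

```shell
#!/usr/bin/env bash
# Hypothetical helper: Spark major.minor a Sedona release was compiled
# against, per the pairings in the text (unknown releases -> non-zero exit).
sedona_compiled_spark() {
  case "$1" in
    1.0.1*|1.1.0*) echo "3.1" ;;
    1.1.1*) echo "3.2" ;;
    *) return 1 ;;
  esac
}

# Compare compile-time and runtime Spark (major.minor). Prints "ok" on a
# match; otherwise prints a warning and returns 1, since Generator-based SQL
# functions may misbehave (Generator gained nodePatterns in Spark 3.2).
check_sedona_spark() {
  local compiled runtime
  if ! compiled="$(sedona_compiled_spark "$1")"; then
    echo "unknown Sedona version: $1" >&2
    return 2
  fi
  runtime="$(printf '%s\n' "$2" | cut -d. -f1,2)"  # "3.2.1" -> "3.2"
  if [ "$compiled" = "$runtime" ]; then
    echo "ok"
  else
    echo "warning: Sedona $1 was compiled against Spark $compiled, but the runtime is Spark $runtime"
    return 1
  fi
}
```

For example, `check_sedona_spark 1.1.1-incubating 3.2.1` prints `ok`, while `check_sedona_spark 1.1.0-incubating 3.2.1` prints a warning and returns 1.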
